Preevy is Livecycle’s new OSS tool, offering simple and quick Preview Environments for Docker Compose apps. This is the second in a series of posts about designing Preevy’s proxy service.

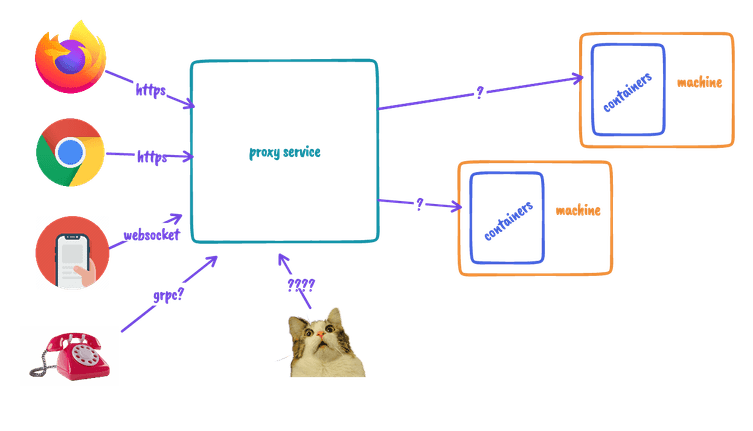

Reminder: Preevy’s proxy service is the public endpoint for the services running on the user’s Preview Environments. Environments are VMs provisioned by Preevy’s CLI on the user’s cloud provider account.

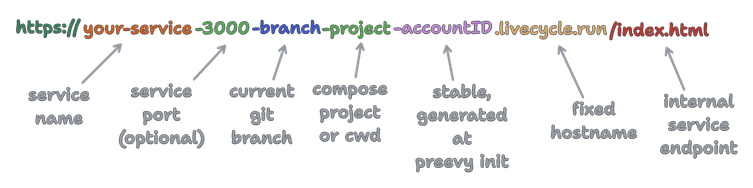

In the previous post, we discussed a URL mapping scheme used by the proxy to identify the request target: the specific preview environment, service and port.

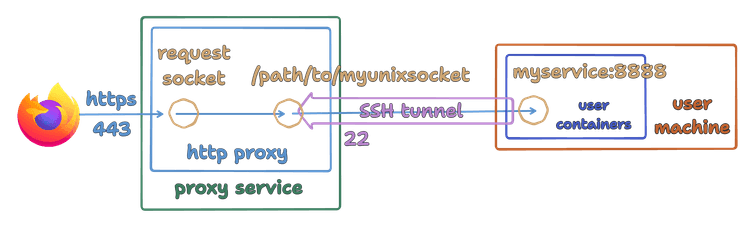

Once the target has been identified for a request, the proxy should route it to the user’s machine. Then, the request should arrive at the specific container running on that machine.

So the next question we had was: How will the proxy send requests to the user’s machines?

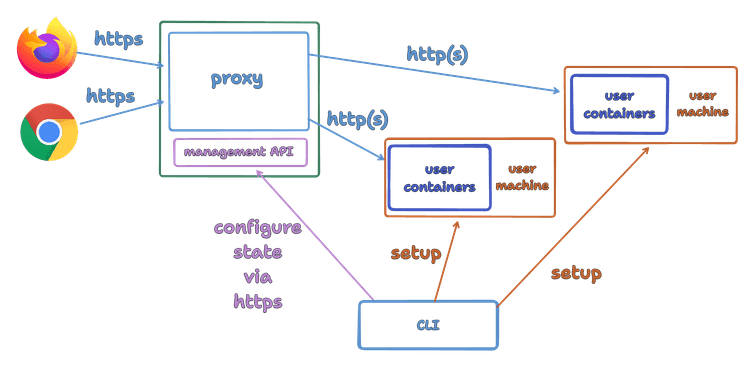

Because preview environments are ephemeral and dynamic, the mapping between a preview environment ID and a specific machine is mutable state. It needs to reflect the current set of provisioned machines, and the proxy needs to read it on every request. How should this state be established and updated?

The first idea we had was to have a separate “management API” endpoint in the proxy. The CLI, which creates and removes the machines, can use this API to update the proxy state.

Updating the state from a separate process (the CLI) means that the state can easily go out of sync, for example if the CLI exits after creating a machine but before updating the proxy. What if the machines themselves update the state via the management API?

Having the machines themselves report their state means there’s less chance of the state going out of sync.

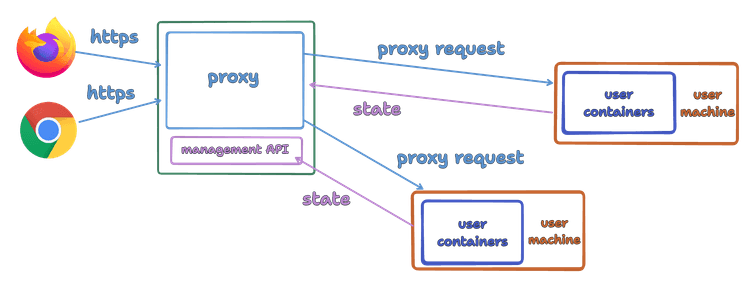

But we can do better: What if the fact that the proxy and machine are connected becomes the state itself? To do this, we need to reverse the connection direction: Machines, when created, will initiate connections to the proxy server, and use it to tunnel the incoming requests into the containers. The incoming connection to the proxy can then become the state in the proxy.
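To make the "connection is the state" idea concrete, here is a minimal TypeScript sketch. It is not Preevy's code: `TunnelConnection`, `TunnelRegistry` and `FakeConnection` are hypothetical names. It shows that the registry needs no separate write API, because an entry exists exactly as long as the machine's connection stays open.

```typescript
// Hypothetical minimal view of an incoming tunnel connection from a user machine.
interface TunnelConnection {
  readonly envId: string;
  onClose(listener: () => void): void;
}

// Maps preview environment IDs to live tunnel connections. There is no
// separate write API: an entry is added when a machine connects and
// removed when that connection closes.
class TunnelRegistry {
  private readonly byEnv = new Map<string, TunnelConnection>();

  accept(conn: TunnelConnection): void {
    this.byEnv.set(conn.envId, conn);
    conn.onClose(() => {
      // Only delete if the entry still points at this connection; a
      // reconnecting machine may already have replaced it.
      if (this.byEnv.get(conn.envId) === conn) {
        this.byEnv.delete(conn.envId);
      }
    });
  }

  // Called per request, once the URL mapping has identified the environment.
  lookup(envId: string): TunnelConnection | undefined {
    return this.byEnv.get(envId);
  }
}

// Stand-in connection for illustration: close() fires the close listeners.
class FakeConnection implements TunnelConnection {
  readonly envId: string;
  private readonly listeners: Array<() => void> = [];

  constructor(envId: string) {
    this.envId = envId;
  }

  onClose(listener: () => void): void {
    this.listeners.push(listener);
  }

  close(): void {
    for (const listener of this.listeners) listener();
  }
}

const registry = new TunnelRegistry();
const conn = new FakeConnection("my-app-pr-42");
registry.accept(conn);
console.log(registry.lookup("my-app-pr-42") === conn); // true
conn.close();
console.log(registry.lookup("my-app-pr-42")); // undefined
```

The identity check in the close handler matters when a machine reconnects: the old connection may close after the new one has registered, and it should not remove the newer entry.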

How do we create tunneling connections? Is there an existing protocol that supports tunneling and multiplexing, or should we create our own?

SSH can create remote or local tunnels. It can also multiplex those tunnels over a single socket. Sounds useful!

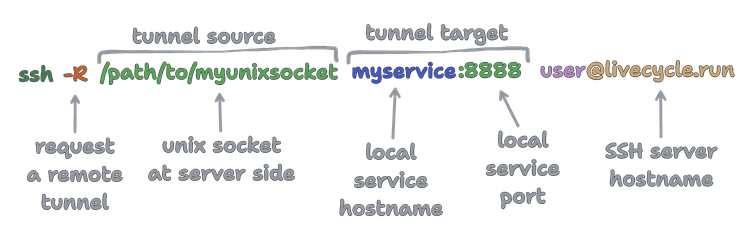

So, our proxy service is now two services, listening on two ports: the web server on port 443, and an SSH server on port 22. The user machine acts as an SSH client, which basically runs the command:

livecycle.run is the hostname of the free proxy server hosted by Livecycle. You can also host it yourself!

Once the SSH tunnel is established, requests from the web server are proxied to a local Unix socket, which is tunneled back to the SSH client on the user's machine, and from there to the service port inside the container network.

SSH does multiplexing out of the box, so every new TCP socket created by the requesting client (a web browser, in this example) is tunneled over the single TCP socket of the SSH connection. This is another advantage of using SSH: with the previous option, every TCP socket from the requesting client would require a new connection to the user machine (or we would have to implement the multiplexing ourselves).
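In SSH, multiplexing lives in the connection layer (RFC 4254): each tunneled TCP socket becomes a channel with its own number, and its bytes travel in `SSH_MSG_CHANNEL_DATA` messages (message number 94) tagged with the recipient channel. The simplified TypeScript sketch below encodes and demultiplexes such messages to illustrate the idea. It deliberately omits the transport layer (packet framing, encryption, MAC) and per-channel flow control, which a real implementation such as the ssh2 package handles; the function names are hypothetical.

```typescript
// Message number from RFC 4254, section 9.
const SSH_MSG_CHANNEL_DATA = 94;

// Encode an SSH_MSG_CHANNEL_DATA payload (RFC 4254, section 5.2):
//   byte    SSH_MSG_CHANNEL_DATA
//   uint32  recipient channel
//   string  data   (a uint32 length followed by the bytes)
function encodeChannelData(channel: number, data: Uint8Array): Uint8Array {
  const msg = new Uint8Array(9 + data.length);
  const view = new DataView(msg.buffer, msg.byteOffset, msg.byteLength);
  msg[0] = SSH_MSG_CHANNEL_DATA;
  view.setUint32(1, channel); // DataView defaults to big-endian, as SSH requires
  view.setUint32(5, data.length);
  msg.set(data, 9);
  return msg;
}

function decodeChannelData(msg: Uint8Array): { channel: number; data: Uint8Array } {
  if (msg.length < 9 || msg[0] !== SSH_MSG_CHANNEL_DATA) {
    throw new Error("not an SSH_MSG_CHANNEL_DATA message");
  }
  const view = new DataView(msg.buffer, msg.byteOffset, msg.byteLength);
  const length = view.getUint32(5);
  if (9 + length > msg.length) throw new Error("truncated data");
  return { channel: view.getUint32(1), data: msg.subarray(9, 9 + length) };
}

// Demultiplex messages read from the single SSH connection into
// per-channel streams, i.e. one stream per tunneled TCP socket.
function demux(messages: Uint8Array[]): Map<number, string> {
  const chunks = new Map<number, Uint8Array[]>();
  for (const m of messages) {
    const { channel, data } = decodeChannelData(m);
    const list = chunks.get(channel) ?? [];
    list.push(data);
    chunks.set(channel, list);
  }
  const streams = new Map<number, string>();
  chunks.forEach((list, channel) => {
    const total = list.reduce((n, c) => n + c.length, 0);
    const joined = new Uint8Array(total);
    let offset = 0;
    for (const c of list) {
      joined.set(c, offset);
      offset += c.length;
    }
    streams.set(channel, new TextDecoder().decode(joined));
  });
  return streams;
}

// Two browser connections, interleaved on one SSH connection as channels 0 and 1.
const enc = new TextEncoder();
const wire = [
  encodeChannelData(0, enc.encode("GET / HTTP/1.1\r\n")),
  encodeChannelData(1, enc.encode("GET /style.css HTTP/1.1\r\n")),
  encodeChannelData(0, enc.encode("Host: example.test\r\n")),
];
const streams = demux(wire);
console.log(JSON.stringify(streams.get(0))); // "GET / HTTP/1.1\r\nHost: example.test\r\n"
```

Because every message carries its channel number, bytes from different browser sockets can be interleaved freely on one TCP connection and still be reassembled per socket on the other side.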

Another nice advantage of tunneling is that the user machine does not need to listen on a web port (HTTP or HTTPS), so it also does not need a TLS certificate. And there's no need to configure the cloud provider's firewall rules to open a web port.

Although we started with the standard OpenSSH client, we ended up coding both the SSH client and the SSH server in native Node.js with the excellent ssh2 package. We kept the server side compatible with the standard OpenSSH client command line shown above, though, to make it easy to debug (and maybe use in the future).

Bonus credits: SSH over TLS

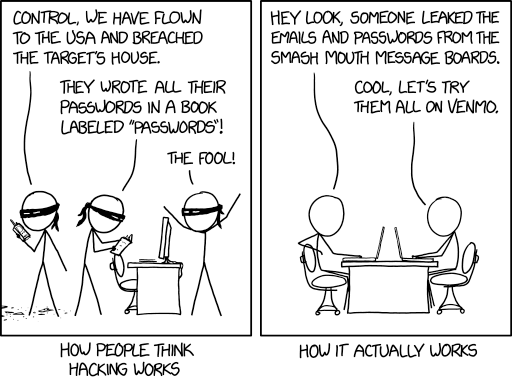

While developing the SSH tunnel feature, we noticed some unauthorized login attempts on the SSH server. These were documented in the SSH log file at /var/log/auth.log:

```
Apr 1 20:55:37 ip-172-26-8-15 sshd[2376417]: Invalid user admin from 161.35.199.4 port 34442
Apr 1 20:55:37 ip-172-26-8-15 sshd[2376417]: Connection closed by invalid user admin 161.35.199.4 port 34442 [preauth]
Apr 1 20:55:56 ip-172-26-8-15 sshd[2376424]: Invalid user admin from 161.35.199.4 port 35226
Apr 1 20:55:56 ip-172-26-8-15 sshd[2376424]: Connection closed by invalid user admin 161.35.199.4 port 35226 [preauth]
Apr 1 20:56:03 ip-172-26-8-15 sshd[2376426]: Invalid user admin from 161.35.199.4 port 54894
Apr 1 20:56:03 ip-172-26-8-15 sshd[2376426]: Connection closed by invalid user admin 161.35.199.4 port 54894 [preauth]
Apr 1 20:56:09 ip-172-26-8-15 sshd[2376428]: Invalid user centos from 161.35.199.4 port 54906
Apr 1 20:56:09 ip-172-26-8-15 sshd[2376428]: Connection closed by invalid user centos 161.35.199.4 port 54906 [preauth]
```

It looks like a script trying default username/password pairs: some are obvious choices like admin, guest and postgres, and some are quite weird, like amanda, asdfgasdfg and reseller02. You can view the entire list, gathered in about two weeks, here.
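The post doesn't say how the full list was compiled. As one possible approach, here is a minimal TypeScript sketch that tallies attempted usernames from the `Invalid user` lines, assuming the sshd log format shown above. The function name `countInvalidUsers` is hypothetical.

```typescript
// Matches sshd lines such as:
//   "... sshd[2376417]: Invalid user admin from 161.35.199.4 port 34442"
// and captures the attempted username.
const INVALID_USER = /sshd\[\d+\]: Invalid user (\S*) from \S+ port \d+/;

// Tally attempted usernames, most frequent first.
function countInvalidUsers(log: string): Array<[string, number]> {
  const counts = new Map<string, number>();
  for (const line of log.split("\n")) {
    const match = INVALID_USER.exec(line);
    if (!match) continue; // skips "Connection closed" and unrelated lines
    counts.set(match[1], (counts.get(match[1]) ?? 0) + 1);
  }
  return Array.from(counts.entries()).sort((a, b) => b[1] - a[1]);
}

const sample = [
  "Apr 1 20:55:37 ip-172-26-8-15 sshd[2376417]: Invalid user admin from 161.35.199.4 port 34442",
  "Apr 1 20:55:37 ip-172-26-8-15 sshd[2376417]: Connection closed by invalid user admin 161.35.199.4 port 34442 [preauth]",
  "Apr 1 20:55:56 ip-172-26-8-15 sshd[2376424]: Invalid user admin from 161.35.199.4 port 35226",
  "Apr 1 20:56:09 ip-172-26-8-15 sshd[2376428]: Invalid user centos from 161.35.199.4 port 54906",
].join("\n");

console.log(JSON.stringify(countInvalidUsers(sample))); // [["admin",2],["centos",1]]
```

Counting only the `Invalid user` lines avoids double-counting, since each failed attempt also produces a matching `Connection closed` line.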

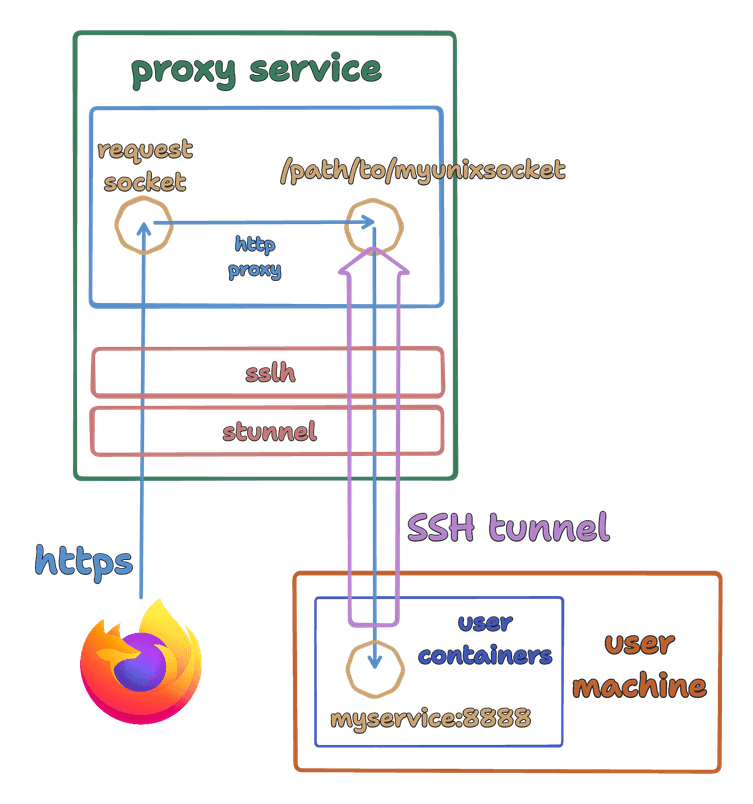

This got us thinking about hiding our SSH port. We could use a non-standard port instead of 22, but how about sharing the HTTPS port, 443? Besides providing (false) security through obscurity, this also lets us reuse our TLS certificates to identify the SSH host, which is actual security!

Sharing port 443 with SSH requires identifying SSH traffic and separating it from HTTPS. We ended up following this helpful guide, which also discusses deploying on Kubernetes with Traefik: stunnel is used to verify and unwrap the TLS layer, and sslh is used to split the traffic between the HTTP proxy and the SSH server. You can see the result at the Preevy repo here, if you want to host the Preevy proxy server yourself.
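sslh decides where to forward a connection by testing the first data the client sends. The TypeScript sketch below illustrates that idea on the plaintext stream after stunnel has unwrapped TLS. It is not sslh's code and the names are hypothetical: it only checks for the `SSH-` identification string that SSH peers send when a connection opens (RFC 4253, section 4.2) and for a few HTTP/1.x method prefixes.

```typescript
type Protocol = "ssh" | "http" | "unknown" | "need-more";

// Byte prefixes that identify each protocol. SSH peers open with an
// identification string starting with "SSH-"; HTTP/1.x requests start
// with a method name followed by a space.
const SIGNATURES: Array<[string, Protocol]> = [
  ["SSH-", "ssh"],
  ...["GET", "HEAD", "POST", "PUT", "DELETE", "OPTIONS", "PATCH", "CONNECT", "TRACE"].map(
    (method): [string, Protocol] => [method + " ", "http"],
  ),
];
const LONGEST = Math.max(...SIGNATURES.map(([sig]) => sig.length));

// Classify a connection from the first bytes the client sent (after TLS
// has been removed). "need-more" means the bytes so far are a prefix of
// some signature, so the caller should wait for more data.
function classify(firstBytes: Uint8Array): Protocol {
  let head = "";
  for (let i = 0; i < Math.min(firstBytes.length, LONGEST); i++) {
    head += String.fromCharCode(firstBytes[i]);
  }
  let couldStillMatch = false;
  for (const [sig, protocol] of SIGNATURES) {
    if (head.startsWith(sig)) return protocol;
    if (sig.startsWith(head)) couldStillMatch = true;
  }
  return couldStillMatch ? "need-more" : "unknown";
}

const te = new TextEncoder();
console.log(classify(te.encode("SSH-2.0-OpenSSH_9.6\r\n"))); // ssh
console.log(classify(te.encode("GET / HTTP/1.1\r\n"))); // http
console.log(classify(te.encode("PO"))); // need-more
```

A production splitter also needs a policy for clients that connect and send nothing (waiting for the server to speak first), which this sketch leaves out.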

Sharing the port is optional: both the proxy server and the CLI support "native" SSH on a separate port as well as SSH over TLS.
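The post doesn't describe how the CLI is told which transport to use. As an illustration only, here is a minimal TypeScript sketch assuming a hypothetical URL-style setting, where an `ssh://` URL selects native SSH (default port 22) and an `ssh+tls://` URL selects SSH over TLS (default port 443). `parseTunnelUrl` and both schemes are assumptions, not Preevy's documented configuration.

```typescript
// Hypothetical URL schemes: "ssh" for native SSH, "ssh+tls" for SSH over TLS.
const DEFAULT_PORTS = { ssh: 22, "ssh+tls": 443 } as const;
type Scheme = keyof typeof DEFAULT_PORTS;

interface TunnelTarget {
  scheme: Scheme;
  host: string;
  port: number;
  useTls: boolean; // wrap the connection in TLS before speaking SSH
}

function isScheme(s: string): s is Scheme {
  return s === "ssh" || s === "ssh+tls";
}

function parseTunnelUrl(raw: string): TunnelTarget {
  const url = new URL(raw);
  const scheme = url.protocol.replace(/:$/, ""); // "ssh+tls:" -> "ssh+tls"
  if (!isScheme(scheme)) throw new Error(`unsupported scheme: ${scheme}`);
  return {
    scheme,
    host: url.hostname,
    port: url.port ? Number(url.port) : DEFAULT_PORTS[scheme],
    useTls: scheme === "ssh+tls",
  };
}

console.log(parseTunnelUrl("ssh+tls://livecycle.run").port); // 443
console.log(parseTunnelUrl("ssh://proxy.example.com:2222").port); // 2222
```

Keeping the transport in a single setting means the rest of the client only needs to know whether to wrap the socket in TLS before starting the SSH handshake.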

In the next post, we’ll discuss routing inside the user machine - what makes a request from the proxy reach the specific service container.