Preevy is for Previews

Preevy is Livecycle’s new Open Source offering. If you’re a Docker Compose user, Preevy can deploy your app to a public, ephemeral environment, also known as a Preview Environment, for every code change you make.

A preview environment is a non-production version of your app, including all its services. It is accessible not only to you but to the rest of your team, including designers, product people and other developers. Preview environments can be used to test, validate and review the changes in a pull request before merging it.

You can read more about Preevy at the official docs site.

Developing Preevy was an interesting technical challenge for us. We thought sharing our winding journey in a series of posts might be interesting (assuming you find these sorts of things interesting, of course). This is the first of a series of posts about the Preevy proxy service.

Proxy is Preevy’s gateway

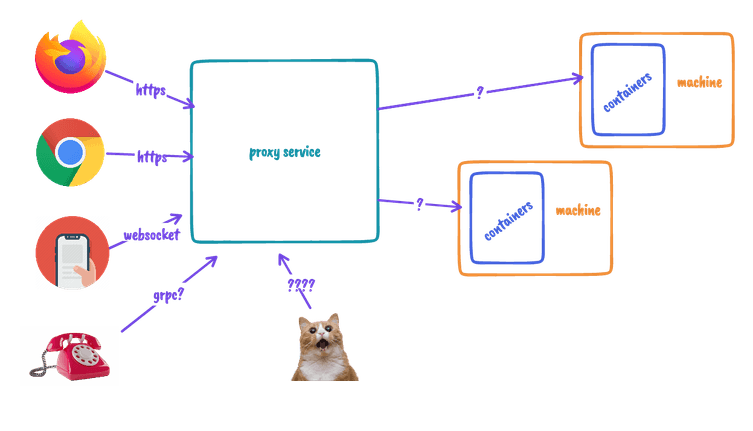

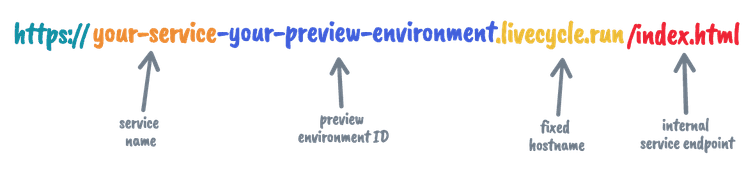

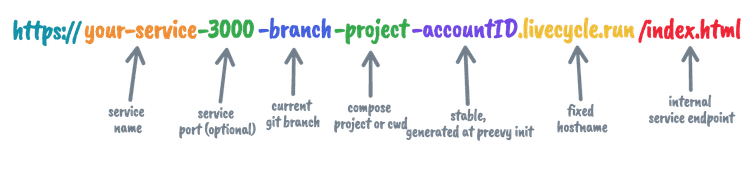

When you run Preevy, it sets up publicly accessible HTTPS URLs for all your Docker Compose services. The Preevy proxy service is responsible for routing HTTPS requests to the machines on your cloud provider that actually host your Docker Compose services.

The proxy service, like the rest of the Preevy project, is Open Source (Apache License), and you can choose to host it yourself or use our free hosted service at https://livecycle.run.

This was the first design draft we came up with.

Notice the question marks.

We had some question marks!

- How will different URLs map to specific services on the machines?

- How will requests be sent to the machines?

- How will incoming requests be routed within the machines to specific containers?

- Should we support only HTTP(s) services?

This blog post will focus on the first question.

First question: URL mapping is not trivial

Preevy users can deploy multiple preview environments, each containing multiple Docker Compose services. Our goal is to have stable, human-readable URLs for each environment and service.

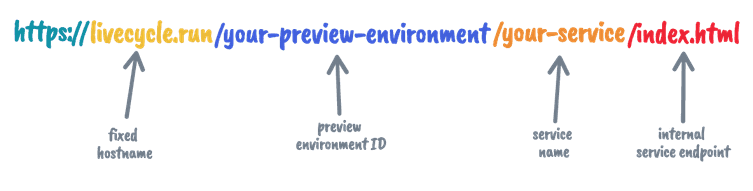

Our first idea: URL paths

The preview environment ID and service name, encoded in the URL path.

One problem with URL paths, though, is that some frontend apps assume that document.location is their root route, so they might require additional configuration to work under a path prefix. Some servers, like gRPC or MongoDB, simply cannot work on non-root paths.
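To make the path problem concrete, here's a small TypeScript sketch using the standard WHATWG URL class. The `/<envId>/<service>/` prefix layout and the `parsePathRoute` helper are hypothetical illustrations, not Preevy code.

```typescript
// Hypothetical path-based scheme: https://livecycle.run/<envId>/<service>/<rest>
interface PathRoute {
  envId: string;
  service: string;
  // The path the service would see after a proxy strips the prefix
  innerPath: string;
}

function parsePathRoute(url: string): PathRoute | undefined {
  const { pathname } = new URL(url);
  // "/my-env/frontend/api/users" -> ["my-env", "frontend", "api", "users"]
  const [envId, service, ...rest] = pathname.split("/").filter((s) => s.length > 0);
  if (!envId || !service) return undefined;
  return { envId, service, innerPath: "/" + rest.join("/") };
}

const page = "https://livecycle.run/my-env/frontend/";
const route = parsePathRoute(page + "api/users");
console.log(route?.service, route?.innerPath); // frontend /api/users

// The app doesn't know about the prefix: a root-relative link like
// "/static/app.js" resolves against the host root and loses "/my-env/frontend".
console.log(new URL("/static/app.js", page).href); // https://livecycle.run/static/app.js
```

Stripping the prefix on the way in is easy; the hard part is every absolute link, redirect and asset path the app generates on the way out.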

So, it seems like we have to encode ALL the information above in the hostname.

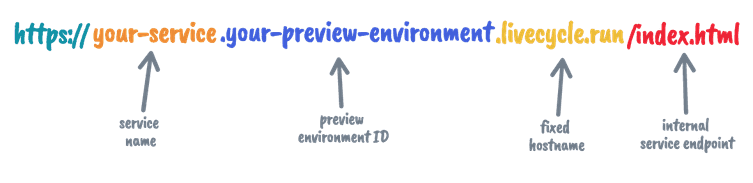

How about subdomains?

The preview environment ID and service name, encoded in subdomains.

Looks pretty, pretty good.

One problem with subdomains, though, is that we'll need to register many TLS certificates. Although wildcard certificates exist, a wildcard only covers a single level of subdomain.

✔️ *.livecycle.run

❌ *.*.livecycle.run

❌ *.*.*.livecycle.run
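The single-level rule comes from how TLS clients match wildcard names (RFC 6125): the `*` stands for exactly one leftmost DNS label. Here's a simplified TypeScript sketch of that check; `matchesCertName` is a hypothetical helper, and it ignores edge cases a real TLS library handles, such as partial-label wildcards like `f*.example.com`.

```typescript
// Simplified wildcard matching: "*" may only be the entire leftmost label
// and matches exactly one non-empty label of the hostname.
function matchesCertName(certName: string, hostname: string): boolean {
  const certLabels = certName.toLowerCase().split(".");
  const hostLabels = hostname.toLowerCase().split(".");
  // A wildcard never spans several labels, so the label counts must be equal
  if (certLabels.length !== hostLabels.length) return false;
  return certLabels.every((label, i) => {
    if (i === 0 && label === "*") return hostLabels[0] !== "";
    // Any other "*" (e.g. in "*.*.livecycle.run") is compared literally and fails
    return label === hostLabels[i];
  });
}

console.log(matchesCertName("*.livecycle.run", "frontend.livecycle.run")); // true
console.log(matchesCertName("*.livecycle.run", "frontend.my-env.livecycle.run")); // false
console.log(matchesCertName("*.*.livecycle.run", "frontend.my-env.livecycle.run")); // false
```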

Theoretically we could register *.your-preview-environment.livecycle.run, but the “your-preview-environment” part changes per preview environment, so we’d need to register certificates on demand. Let’s Encrypt limits issuance to 50 certificates per week per “registered domain” (livecycle.run, in this example). Other free certificate providers have even stricter limits. And we didn’t want to go the paid route: even if we picked up the tab for our hosted service, it would seriously limit our users’ ability to host the proxy themselves.

Single subdomain it is!

This way we can register *.livecycle.run and be done with it!

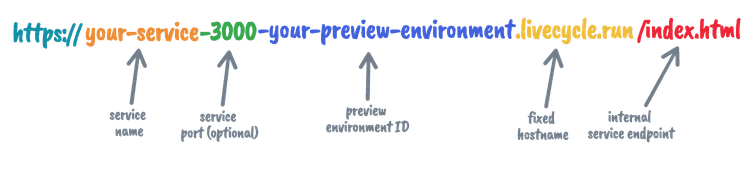

One problem with a single subdomain, though, is that some services listen on more than one port.

Sigh.

Footnote: Actually, we glossed over a bigger point - some services might not even be HTTP(s). This is question 4 in the list above. For now, let’s assume all requests are HTTP(s). The proxy service can expose a single port, 443, accept only HTTPS requests, and route them to each service’s internal port on the machine.

OK, how about, for services with more than a single port, we add the port to the hostname?

Single subdomain with (optional) port!

We decided to omit the port for “simple” services which expose a single port.
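The exact hostname layout is shown in the images, so here's a sketch with an assumed layout: `<service>-<port>-<envId>.livecycle.run`, dropping the port for single-port services. The `hostnamesFor` helper and `ServiceEndpoint` type are hypothetical. The point it illustrates is that everything is packed into one DNS label, so a single `*.livecycle.run` certificate covers it, but that label is capped at 63 characters.

```typescript
// Assumed single-label layout (hypothetical):
//   <service>-<port>-<envId>.livecycle.run   for multi-port services
//   <service>-<envId>.livecycle.run          for single-port services
interface ServiceEndpoint {
  service: string;
  envId: string;
  ports: number[]; // every port the service exposes
}

const BASE_DOMAIN = "livecycle.run";
const MAX_LABEL_LENGTH = 63; // DNS limit for a single label (RFC 1035)

function hostnamesFor(ep: ServiceEndpoint): string[] {
  const labels =
    ep.ports.length === 1
      ? [`${ep.service}-${ep.envId}`] // omit the port for "simple" services
      : ep.ports.map((port) => `${ep.service}-${port}-${ep.envId}`);
  for (const label of labels) {
    if (label.length > MAX_LABEL_LENGTH) {
      throw new Error(`label too long (${label.length} > ${MAX_LABEL_LENGTH}): ${label}`);
    }
  }
  // One label under the base domain, so the *.livecycle.run wildcard matches
  return labels.map((label) => `${label}.${BASE_DOMAIN}`);
}

console.log(hostnamesFor({ service: "frontend", envId: "my-env", ports: [3000] }).join(", "));
// frontend-my-env.livecycle.run
console.log(hostnamesFor({ service: "api", envId: "my-env", ports: [3000, 9229] }).join(", "));
// api-3000-my-env.livecycle.run, api-9229-my-env.livecycle.run
```

Since service names and environment IDs can contain hyphens themselves, a label built this way can't be reliably split back into its parts, so this sketch only goes in one direction.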

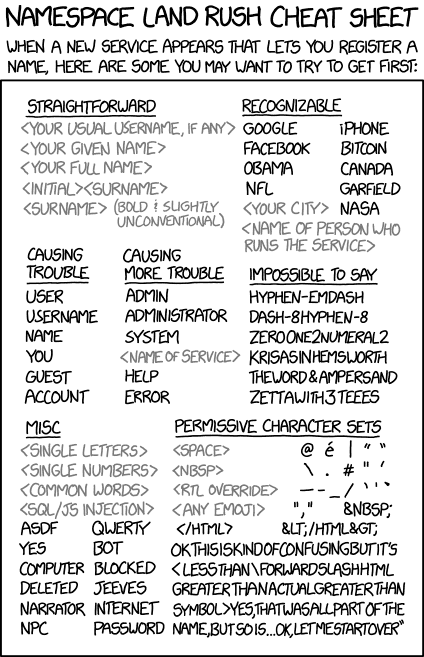

One problem with a single subdomain per port, though, is that the “your-preview-environment” part is chosen by the user, so two users could pick the same name and collide.

We could add a registration phase to our service (and start a land rush!), but we wanted to KISS for now - anyone can start deploying preview environments, no registration needed.

credit: XKCD

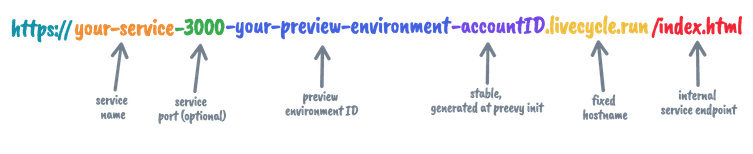

One solution would be to generate a random ID for each deployment, but that would make the URLs unstable: they would change with every deployment, which could be confusing.

We decided to add an “account ID” suffix to the end of the hostname. The account ID would have to be randomly generated AND stable, and should require some “proof of ownership” to protect against fraud, phishing and impersonation, that is, deploying to an account ID which is not yours.

We ended up basing the account ID on a cryptographic secret generated by the preevy init command.
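The post doesn't spell out how the account ID is derived from that secret. Here is one plausible shape, sketched with Node's built-in crypto module, under our own assumptions: the secret is modeled as an Ed25519 key pair, the ID is a truncated SHA-256 of the public key, and ownership is proven by signing a random challenge. `accountIdFor`, `proveOwnership` and `verifyOwnership` are hypothetical names, not Preevy's API.

```typescript
import { createHash, generateKeyPairSync, randomBytes, sign, verify, KeyObject } from "node:crypto";

// Assumption: the secret created at `preevy init` is modeled as an Ed25519 key pair.
const { publicKey, privateKey } = generateKeyPairSync("ed25519");

// Stable account ID: a short, hostname-safe digest of the public key.
// The same key always yields the same ID, and nobody can choose an ID on purpose.
function accountIdFor(pub: KeyObject): string {
  const der = pub.export({ type: "spki", format: "der" });
  return createHash("sha256").update(der).digest("hex").slice(0, 12);
}

// Proof of ownership: the verifier sends a random challenge and the client signs it.
function proveOwnership(priv: KeyObject, challenge: Buffer): Buffer {
  return sign(null, challenge, priv); // Ed25519 takes no separate digest algorithm
}

// The claimed ID must match the key, and the signature must verify with that key.
function verifyOwnership(claimedId: string, pub: KeyObject, challenge: Buffer, signature: Buffer): boolean {
  return accountIdFor(pub) === claimedId && verify(null, challenge, pub, signature);
}

const accountId = accountIdFor(publicKey);
const challenge = randomBytes(32);
const signature = proveOwnership(privateKey, challenge);
// Prints a 12-character hex ID followed by true
console.log(accountId, verifyOwnership(accountId, publicKey, challenge, signature));
```

An impostor who only knows someone else's account ID can't produce a valid signature, and a different key pair hashes to a different ID.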

More on the account ID in the next post, when we describe the connection between the proxy and the machines.

Wait, what is that “your-preview-environment” part anyway?

We call it “environment ID”, or env ID for short. You can supply your own env ID by running

preevy up --id my-preview-environment

but since Preevy is a tool for deploying preview environments, it should have sane defaults that describe the context. We decided to use the Git branch name (which is usually also associated with a pull request) along with the Docker Compose project name (or directory name).
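Here's a sketch of how such a default could be computed. It assumes a `<project>-<branch>` layout and a simple sanitizer that keeps the result hostname-friendly; `defaultEnvId`, `toDnsSafe` and `currentBranch` are hypothetical helpers, not Preevy's actual code. `git rev-parse --abbrev-ref HEAD` is the standard Git command for reading the current branch name.

```typescript
import { execSync } from "node:child_process";

// Hypothetical sanitizer: lowercase, turn every run of characters outside
// [a-z0-9] into a single "-", and trim leading/trailing dashes.
function toDnsSafe(s: string): string {
  return s
    .toLowerCase()
    .replace(/[^a-z0-9]+/g, "-")
    .replace(/^-+|-+$/g, "");
}

// Hypothetical default layout: "<compose project>-<git branch>"
function defaultEnvId(projectName: string, branch: string): string {
  return `${toDnsSafe(projectName)}-${toDnsSafe(branch)}`;
}

// Reads the current branch, e.g. "feature/login" ("HEAD" when detached).
// Must run inside a Git working tree, otherwise execSync throws.
export function currentBranch(): string {
  return execSync("git rev-parse --abbrev-ref HEAD", { encoding: "utf8" }).trim();
}

console.log(defaultEnvId("My_Shop", "feature/Login-Page")); // my-shop-feature-login-page
```

Sanitizing matters because branch names like `feature/login` contain characters that aren't valid in a hostname, and if the env ID ends up in a single DNS label next to the service name, the whole label is capped at 63 characters.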

So:

Meaningful environment ID!

Running out of horizontal space here…

In the next posts, we’ll discuss the routing mechanism, connecting the machines to the proxy and our future plans for the proxy service.

Stay tuned!